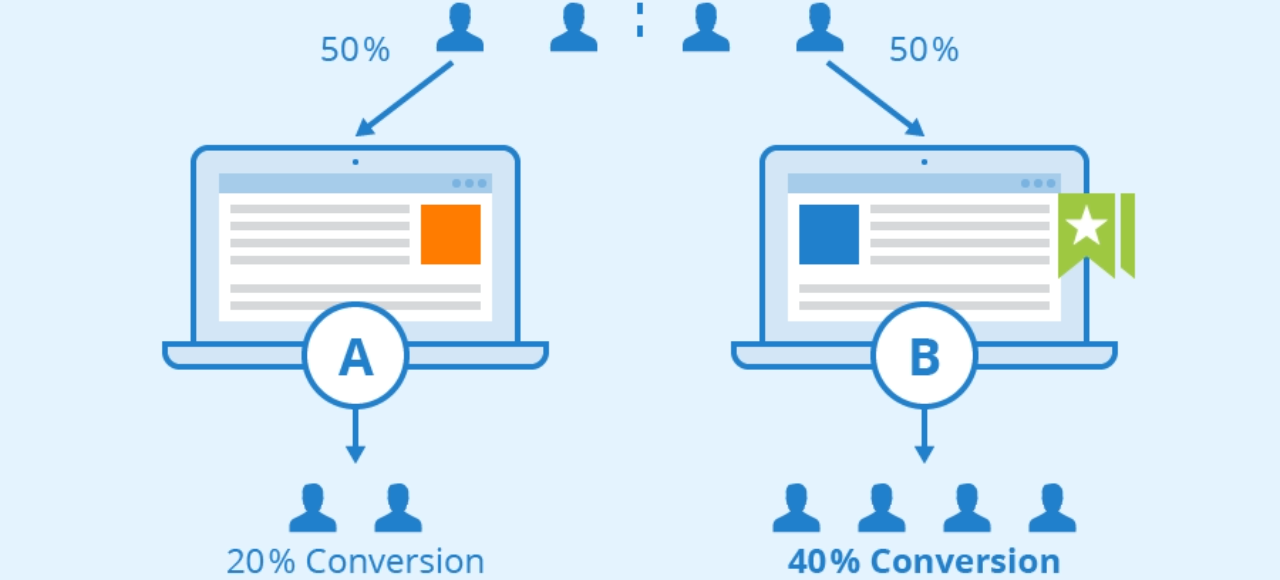

A/B testing in ecommerce is often misunderstood as a series of tactical tweaks (swapping a button color, rotating images, changing a headline) made in the hope that one version will somehow outperform the other.

But when you look at how high-performing ecommerce brands operate, the logic behind experimentation is very different. They treat tests as systematic learning vehicles, not quick fixes. A well-designed experiment helps a team understand how users behave, which obstacles stop them from buying, and what types of improvements lead to measurable revenue gains.

A/B testing is powerful because it moves decision-making away from personal preferences and toward evidence. When used consistently, it becomes a growth engine that compounds over time.

When (and Why) to Run A/B Tests

A/B testing is most effective when you use it to validate a real hypothesis about user behavior. In practical terms, you test when you expect a change to influence conversion rate, revenue per visitor, average order value, or checkout completion. The purpose isn’t to “see what happens” but to confirm or reject an assumption with measurable impact.

Weak experiments usually share the same issues: testing something with no meaningful upside, launching tests without enough traffic to draw conclusions, or running experiments without considering how the current season affects user behavior. Strong experimentation requires clarity about why you are testing and what change the test should create.

Metrics That Should Be Impacted

To optimize ecommerce sales, you need to understand how tests influence the metrics that matter most:

- Conversion Rate (CR): The share of sessions that end in a transaction. Most UX and messaging tests aim here.

- Average Order Value (AOV): Often influenced by pricing, bundling, upsells, and offer structure.

- Revenue Per Visitor (RPV): Total revenue divided by visitors, which equals CR × AOV when both use the same denominator. Because it captures both, it is ideal for comparing the true impact of two experiences.

- Revenue Per Mille (RPM): Revenue per 1,000 visitors (RPV × 1,000). Useful for high-traffic stores running paid acquisition, since it shows how efficiently each 1,000 visitors are monetized.
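A minimal sketch of how these four metrics relate, assuming you can export sessions, orders, and revenue per variant from your analytics tool. It uses sessions as the denominator throughout, matching the CR definition above (some tools report per user instead); the `VariantStats` name and the figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Raw totals for one variant over the test period."""
    sessions: int   # sessions exposed to the variant
    orders: int     # completed transactions
    revenue: float  # total revenue from those orders

    @property
    def conversion_rate(self) -> float:
        # CR: share of sessions that ended in a transaction
        return self.orders / self.sessions

    @property
    def aov(self) -> float:
        # AOV: average revenue per order (0 if there were no orders)
        return self.revenue / self.orders if self.orders else 0.0

    @property
    def rpv(self) -> float:
        # RPV: revenue per session; equals conversion_rate * aov
        return self.revenue / self.sessions

    @property
    def rpm(self) -> float:
        # RPM: revenue per 1,000 sessions
        return self.rpv * 1000


# Hypothetical totals for illustration only
control = VariantStats(sessions=20_000, orders=500, revenue=37_500.0)
print(f"CR {control.conversion_rate:.2%}, AOV ${control.aov:.2f}, "
      f"RPV ${control.rpv:.3f}, RPM ${control.rpm:,.0f}")
# → CR 2.50%, AOV $75.00, RPV $1.875, RPM $1,875
```

Computing RPV directly from revenue and sessions, rather than multiplying rounded CR and AOV figures, avoids compounding rounding errors in reports.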

A strong test affects at least one of these metrics in a measurable way. If a test can't influence revenue-related outcomes, it seldom justifies the effort.

Many underperforming Shopify stores fail here not for lack of traffic, but because they ignore or misread the metrics that reveal real conversion problems. That blind spot underlies many of the most common Shopify conversion mistakes.

When Not to Test

Not every idea deserves an A/B test. Avoid testing when:

- Traffic is too low to reach significance in a reasonable timeframe.

- The change is too small to matter, such as micro-adjustments that won’t meaningfully shift user behavior.

- There is no clear hypothesis behind the idea.

- External factors like major promotions, shipping delays, or holiday seasonality distort normal patterns.

- A platform update or theme change has recently introduced instability.

Bad tests create noise. If the environment isn’t stable or the expected effect is tiny, you won’t learn anything reliable.

How to Prioritize Tests Intelligently

Every ecommerce team eventually faces the same problem: more test ideas than resources to run them. Prioritization frameworks help maintain discipline so you focus on experiments with enough upside to justify attention.

Prioritization Frameworks (PIE, ICE and Variations)

Frameworks such as PIE (Potential, Importance, Ease) and ICE (Impact, Confidence, Ease) are among the most commonly used models for deciding which experiments deserve attention first. Both help teams rank ideas by answering a set of structured questions:

- How large is the potential change in behavior?

- How important is the affected page or flow?

- How confident are you in the hypothesis?

- How complex is the implementation?

The objective isn’t to score everything perfectly but to prevent your backlog from becoming a random list. A framework transforms ambiguity into a rational queue.
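A minimal sketch of turning these questions into a ranked queue, assuming each ICE criterion is scored from 1 to 10 and combined with a simple average. Conventions vary (some teams multiply the scores, and PIE swaps in Potential, Importance, Ease), and the `TestIdea` class and backlog entries are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    name: str
    impact: int      # 1-10: how large a behavior change we expect
    confidence: int  # 1-10: how strong the supporting evidence is
    ease: int        # 1-10: 10 = trivial to build and launch

    def __post_init__(self) -> None:
        for score in (self.impact, self.confidence, self.ease):
            if not 1 <= score <= 10:
                raise ValueError("scores must be between 1 and 10")

    @property
    def ice_score(self) -> float:
        # Simple average; some teams multiply the three scores instead.
        return (self.impact + self.confidence + self.ease) / 3


def prioritize(ideas: list[TestIdea]) -> list[TestIdea]:
    """Return ideas sorted from highest to lowest ICE score."""
    return sorted(ideas, key=lambda idea: idea.ice_score, reverse=True)


backlog = [
    TestIdea("New homepage hero banner", impact=3, confidence=4, ease=9),
    TestIdea("Show shipping cost on PDP", impact=8, confidence=7, ease=6),
    TestIdea("Remove optional checkout fields", impact=7, confidence=8, ease=7),
]

for idea in prioritize(backlog):
    print(f"{idea.ice_score:.1f}  {idea.name}")
# 7.3  Remove optional checkout fields
# 7.0  Show shipping cost on PDP
# 5.3  New homepage hero banner
```

The easy homepage tweak lands last: a high Ease score cannot make up for low Impact and Confidence.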

Choosing Where to Test First

It’s tempting to start with homepage design or new creative. But the most effective starting points usually sit closer to the transaction:

- Checkout

- Product page

- Offer structure

- Pricing and shipping thresholds

Changes in these areas typically influence revenue per visitor far more quickly than cosmetic updates on the homepage. In practice, the priority is testing where both traffic volume and buying intent are concentrated. A 2% lift in checkout completion often outweighs a 15% lift on a page where few users ever convert.
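The comparison above is easy to check with rough arithmetic. The traffic and conversion figures below are hypothetical, the lifts are relative, and the sketch generously assumes every extra conversion on the low-intent page becomes an order.

```python
def incremental_orders(sessions: int, conversion_rate: float,
                       relative_lift: float) -> float:
    """Extra orders per period from a relative lift on a page's conversion rate."""
    return sessions * conversion_rate * relative_lift


# Hypothetical monthly figures for illustration only
checkout_gain = incremental_orders(sessions=10_000, conversion_rate=0.50,
                                   relative_lift=0.02)
low_intent_gain = incremental_orders(sessions=20_000, conversion_rate=0.005,
                                     relative_lift=0.15)

print(f"Checkout, +2% lift:         {checkout_gain:.0f} extra orders")
print(f"Low-intent page, +15% lift: {low_intent_gain:.0f} extra orders")
```

With these numbers, the 2% checkout lift yields about 100 extra orders, while the 15% lift on the low-intent page yields about 15, because the checkout starts from a far larger base of likely buyers.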

Teams focus on these touchpoints because they sit at the moment where intent turns into revenue. By the time a user reaches a product page or checkout, they’ve already invested attention, evaluated options, and shown clear purchase readiness.

Any friction at this stage (hesitation around price, uncertainty about shipping, unclear product benefits, slow loading, or layout confusion) directly interrupts a conversion that was already within reach.

Optimizing these high-intent pages removes obstacles where they matter most and typically produces measurable lifts in revenue per visitor. Unlike top-of-funnel improvements driven by SEO, these optimizations affect users who are already close to buying, making their impact more immediate and predictable.

There is also a structural reason, grounded in traffic distribution and path analysis. Many ecommerce visitors never engage deeply with the homepage or brand storytelling, but nearly all purchasers pass through the product detail page (PDP) and checkout. Improving these pages therefore increases the efficiency of the entire acquisition pipeline.

Even small gains cascade across all channels, amplifying returns from paid media, email campaigns, and organic search. This is why mature conversion rate optimization (CRO) programs treat PDPs, cart, and checkout as leverage points: they shape the final decision and carry disproportionate influence compared to earlier, lower-intent touchpoints.

Well-Formulated Hypotheses

A/B testing only works when you articulate what you expect to happen and why. A good hypothesis acts as a guidepost through the entire experiment.

Structure of a Strong Hypothesis

A practical, repeatable structure is:

Observed problem → Proposed change → Expected outcome

For example:

Users abandon the checkout when shipping costs appear late in the process. If shipping information becomes visible earlier on the PDP, abandonment should decrease and conversion rate should increase.

This hypothesis targets a common checkout optimization challenge, reducing abandonment, where small changes in when information appears can have a disproportionate impact on revenue.

A weak hypothesis sounds like:

“We want to test a different banner to see if it works better.”

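Because the weak version names no observed problem and no expected outcome, no result can tell you why it happened. Strong hypotheses, by contrast, help you diagnose problems and understand causality.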

Ecommerce Hypothesis Examples

Here are a few well-structured hypotheses:

- If product images highlight scale more clearly, fewer users will hesitate about size and checkout initiation will increase.

- If the PDP emphasizes social proof higher on the page, skimmers will find reassurance sooner and add-to-cart actions will increase.

- If the store surfaces its free shipping threshold earlier in the journey, AOV should rise as users add more items to qualify.

Each hypothesis ties directly to a behavioral insight.

Common Ecommerce Test Variables

Products, audiences, and brands differ, but certain elements consistently shape performance. Treat them as levers that materially shift outcomes.

Copy and Microcopy

Titles, descriptions, clarity around guarantees, trust badges, and short explanations near friction points influence decision-making. A single line of microcopy near a form field can reduce hesitation significantly.

Media and Visual Content

Image sequencing, video demonstrations, lifestyle vs studio images, and placement of customer-generated content all affect how users interpret value. High-quality media often improves add-to-cart initiation more reliably than layout shifts.

Layout, Hierarchy and Page Components

Spacing, information order, and section prominence guide attention. Tests in this area often reveal how users naturally scan a page, which elements they ignore, and what interrupts momentum.

Offer, Price and Incentives

Discount presentation, bundles, subscription options, gift-with-purchase incentives, and free shipping thresholds influence both AOV and conversion rate. Small changes in framing sometimes outperform large creative redesigns.

Checkout and Friction

Fewer fields, simpler validation, and clearer delivery expectations tend to produce measurable improvements. Testing friction removal is often one of the clearest paths to optimizing ecommerce sales.

Statistical Control and Significance

Understanding statistical fundamentals prevents misinterpretation. Many teams stop tests too early or assume a minor variance is meaningful.

Why Volume Matters

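Significance requires a minimum number of sessions and conversions, and that minimum grows as the effect you want to detect shrinks: a lift half as large needs roughly four times the sample. When volume is too low, noise overwhelms signal. This is why brands with modest traffic often need to test larger changes rather than micro-adjustments.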
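A minimal sketch of estimating the required volume up front, using a common normal-approximation formula for comparing two conversion rates (two-sided test). The inputs (baseline CR, minimum detectable effect, 5% significance, 80% power, daily traffic) and the function names are illustrative assumptions; dedicated calculators may use slightly different formulas.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_cr: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Sessions needed in EACH variant to detect a relative lift of
    `relative_mde` over `baseline_cr` with a two-sided test
    (normal approximation for two proportions)."""
    p1 = baseline_cr
    p2 = baseline_cr * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # ~1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # ~0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def duration_in_weeks(n_per_variant: int, variants: int, daily_sessions: int) -> int:
    """Test length rounded up to whole weeks so every weekday is represented equally."""
    days = math.ceil(n_per_variant * variants / daily_sessions)
    return math.ceil(days / 7)


# Illustrative inputs: 2.5% baseline CR, aiming to detect a 10% relative lift
n = sample_size_per_variant(baseline_cr=0.025, relative_mde=0.10)
weeks = duration_in_weeks(n, variants=2, daily_sessions=5_000)
print(f"{n:,} sessions per variant, about {weeks} weeks")
```

With these inputs the estimate is roughly 64,000 sessions per variant, or four weeks at 5,000 daily sessions split across two variants. Rounding up to whole weeks is a deliberate choice so that weekday and weekend behavior are weighted evenly.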

Statistical Significance and Reliability

Statistical significance indicates that a difference as large as the one observed would be unlikely to arise by chance if the variants truly performed the same. Reliable experiments run for a sufficient duration, long enough to capture weekday and weekend patterns, and reach a predetermined confidence threshold before conclusions are drawn.
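A minimal sketch of a fixed-horizon significance check, assuming a pooled two-proportion z-test evaluated once at the planned sample size. This is one common frequentist choice, not the only one (sequential and Bayesian methods are alternatives); the function name and the counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided pooled z-test for a difference in conversion rates.
    Returns (z statistic, p-value)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate under "no difference"
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: control 500/20,000 vs variant 560/20,000 (a 12% relative lift)
z, p = two_proportion_z_test(conv_a=500, n_a=20_000, conv_b=560, n_b=20_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

Here p comes out around 0.06: a 12% observed lift that still does not clear a 5% threshold at this sample size, which is exactly the situation where stopping early and declaring a winner would be premature.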

Audience Segmentation

Segmentation can reveal insights that blended averages conceal. The key is balancing segmentation depth with sample size constraints.

Behavior-Based Testing: New vs Returning Users

Returning users often convert differently because they understand your brand. New users require faster value communication. Testing both segments together can mask performance patterns.
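A minimal sketch of how a blended average can hide opposite effects, using hypothetical counts. Before trusting any segment-level read, check that each segment on its own has enough sessions and conversions.

```python
from collections import defaultdict

Key = tuple[str, str]  # (segment, variant)

# Hypothetical results: (segment, variant) -> (sessions, orders)
RESULTS: dict[Key, tuple[int, int]] = {
    ("new", "control"): (10_000, 150),
    ("new", "variant"): (10_000, 200),
    ("returning", "control"): (5_000, 300),
    ("returning", "variant"): (5_000, 250),
}


def rates_by_segment(results: dict[Key, tuple[int, int]]) -> dict[Key, float]:
    """Conversion rate for each (segment, variant) pair."""
    return {key: orders / sessions for key, (sessions, orders) in results.items()}


def blended_rates(results: dict[Key, tuple[int, int]]) -> dict[str, float]:
    """Conversion rate per variant with every segment pooled together."""
    totals: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # [sessions, orders]
    for (_segment, variant), (sessions, orders) in results.items():
        totals[variant][0] += sessions
        totals[variant][1] += orders
    return {variant: orders / sessions for variant, (sessions, orders) in totals.items()}


print("Blended:", {v: f"{r:.1%}" for v, r in blended_rates(RESULTS).items()})
for (segment, variant), rate in rates_by_segment(RESULTS).items():
    print(f"{segment:9} {variant:7} {rate:.1%}")
```

Both variants blend to 3.0%, yet the variant wins with new users (2.0% vs 1.5%) and loses with returning users (5.0% vs 6.0%).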

Mobile vs Desktop

Mobile behavior differs considerably. Thumb reach, page length, and scroll patterns all influence how users progress, so a winning desktop variant sometimes underperforms on mobile, and vice versa. For a deeper look at these patterns, see the separate discussion of Shopify mobile optimization strategies.

Segmentation by Traffic Source

Paid traffic, organic search, influencer traffic, and email traffic rarely behave the same way. Segmenting by acquisition source helps clarify which improvements are universal and which are channel-specific.

Interpreting Results and Next Steps

A winning test doesn’t close the loop; it opens the next one. The real advantage of A/B testing ecommerce experiences comes from understanding why a variation worked and how that learning applies beyond the test itself.

Instead of treating each experiment as a standalone event, high-performing teams view results as signals that reveal how customers think, evaluate products, and navigate friction. This shift in interpretation is what turns testing from a series of isolated wins into a long-term competitive advantage.

Documentation and Learning Repository

Building a repository of experiments is essential for retaining organizational knowledge. A strong repository captures more than the outcome; it records the hypothesis, the reasoning behind the idea, the audience segments involved, external factors influencing behavior, and what the results imply about user intent.
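A minimal sketch of one repository entry, assuming a simple in-code structure rather than any particular tool. The field names mirror the items listed above and are illustrative; the two example records reuse hypotheses from earlier in this article, and their rationale text is a placeholder.

```python
from dataclasses import dataclass, field


@dataclass
class ExperimentRecord:
    """One entry in a team's experiment log (all field names are illustrative)."""
    name: str
    area: str               # funnel area, e.g. "PDP" or "checkout"
    observed_problem: str   # hypothesis, part 1
    proposed_change: str    # hypothesis, part 2
    expected_outcome: str   # hypothesis, part 3
    rationale: str          # why the team believed the idea
    segments: list[str] = field(default_factory=list)
    external_factors: list[str] = field(default_factory=list)
    outcome: str = "pending"   # e.g. "win", "loss", "inconclusive"
    learning: str = ""         # what the result implies about user intent


def records_for_area(repo: list[ExperimentRecord], area: str) -> list[ExperimentRecord]:
    """Past experiments touching one funnel area, to review before planning a new test."""
    return [r for r in repo if r.area.lower() == area.lower()]


repo = [
    ExperimentRecord(
        name="Shipping cost on PDP",
        area="checkout",
        observed_problem="Users abandon checkout when shipping costs appear late",
        proposed_change="Show shipping information on the PDP",
        expected_outcome="Lower checkout abandonment, higher CR",
        rationale="Placeholder: cite the analysis that motivated the idea",
        segments=["new", "returning"],
    ),
    ExperimentRecord(
        name="Social proof above the fold",
        area="PDP",
        observed_problem="Skimmers do not find reassurance quickly",
        proposed_change="Move social proof higher on the PDP",
        expected_outcome="More add-to-cart actions",
        rationale="Placeholder: cite the analysis that motivated the idea",
    ),
]

for record in records_for_area(repo, "pdp"):
    print(record.name, "->", record.outcome)
```

Logging the hypothesis in its three parts, rather than as a single sentence, makes it easier to later ask whether a loss came from a wrong diagnosis or a weak change.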

Over time, these patterns become a reference library that guides future prioritization. When your team can review dozens of past insights about PDP clarity, pricing sensitivity, or shipping expectations, new tests stop being guesswork and instead become informed decisions rooted in historical evidence.

The Continuous CRO Cycle

A healthy CRO program evolves continuously. The cycle is simple:

Test → Analyze → Learn → Refine

But its real strength comes from how rigorously it is repeated.

Winning variants usually raise new questions worth exploring. If a new PDP layout lifts conversion, you investigate which specific elements did the heavy lifting. If a checkout hypothesis fails, you analyze whether the friction point was misunderstood or whether another part of the funnel absorbed the impact.

Losses are equally valuable. They reveal where assumptions didn’t align with real user behavior and help refine your mental model of the shopper. When documented properly, they often prevent unnecessary tests in the future. A mature CRO program isn’t defined by the number of winning tests but by how consistently insights inform decisions across merchandising, design, acquisition, and product teams.

A team that interprets results with this mindset builds a knowledge engine, one where every experiment sharpens the next, improvements compound, and ecommerce performance becomes more predictable over time.

Common A/B Testing Mistakes

Some of the most damaging A/B testing mistakes come from misreading data rather than implementing the wrong ideas. One of the biggest issues is testing without enough traffic to reach statistical significance. When the sample is too small, natural fluctuations can look like meaningful results, leading teams to adopt changes that never truly improve performance.

Another common problem is running experiments during unstable periods, such as heavy promotions or sudden spikes in paid traffic. In these moments, user intent shifts for reasons unrelated to the test, and the results become unreliable. Overlapping tests create a similar issue by introducing multiple changes at once, making it impossible to isolate which variable influenced behavior.

Focusing only on conversion rate is another pitfall. A variant that raises CR but lowers AOV can reduce overall revenue. This is why ecommerce teams track broader indicators like revenue per visitor and checkout completion to understand the full impact of each variation.
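A worked example of this pitfall with hypothetical numbers, where the variant converts more often but sells smaller baskets. The `summarize` helper is illustrative and uses sessions as the denominator.

```python
def summarize(sessions: int, orders: int, revenue: float) -> dict[str, float]:
    """CR, AOV, and RPV for one variant (sessions as the denominator)."""
    return {
        "cr": orders / sessions,
        "aov": revenue / orders,
        "rpv": revenue / sessions,
    }


# Hypothetical results: the variant converts more often but sells smaller baskets.
control = summarize(sessions=20_000, orders=500, revenue=40_000.0)
variant = summarize(sessions=20_000, orders=540, revenue=37_800.0)

for name, row in (("control", control), ("variant", variant)):
    print(f"{name:8} CR {row['cr']:.2%}  AOV ${row['aov']:.2f}  RPV ${row['rpv']:.2f}")

winner = "variant" if variant["rpv"] > control["rpv"] else "control"
print("Higher revenue per visitor:", winner)
```

CR rises from 2.50% to 2.70%, but RPV falls from $2.00 to $1.89, so the variant that "wins" on conversion loses revenue. In practice, an RPV difference needs its own significance check, since revenue is typically noisier than conversion.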

Finally, many teams end tests too early, mistaking short-lived excitement or novelty effects for genuine improvement. Reliable experiments run long enough to capture normal fluctuations and repeatable patterns. A smaller number of disciplined, well-timed tests will produce far better insights than a high volume of rushed or poorly controlled experiments.
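One mechanical reason early stopping misleads is "peeking": checking results repeatedly and stopping the first time they look significant. The sketch below simulates A/A tests, where both arms share the same hypothetical 3% conversion rate, so every declared winner is a false positive. It compares checking once at the planned end against checking daily; daily conversions are drawn with a normal approximation to the binomial to keep it stdlib-only, and all parameters are assumptions.

```python
import math
import random
from statistics import NormalDist


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):
        return 1.0
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def daily_conversions(rng: random.Random, sessions: int, rate: float) -> int:
    """Normal approximation to a binomial draw (keeps the sketch stdlib-only)."""
    mean = sessions * rate
    sd = math.sqrt(sessions * rate * (1 - rate))
    return max(0, round(rng.gauss(mean, sd)))


def aa_false_positive_rate(peek_daily: bool, sims: int = 1000, days: int = 28,
                           sessions_per_day: int = 1000, rate: float = 0.03,
                           alpha: float = 0.05, seed: int = 7) -> float:
    """Share of simulated A/A tests (no real difference) declared significant."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(sims):
        conv_a = conv_b = n = 0
        significant = False
        for _day in range(days):
            conv_a += daily_conversions(rng, sessions_per_day, rate)
            conv_b += daily_conversions(rng, sessions_per_day, rate)
            n += sessions_per_day  # sessions per arm so far
            if peek_daily and p_value(conv_a, n, conv_b, n) < alpha:
                significant = True  # stopped early and declared a "winner"
                break
        if not peek_daily:
            # Fixed horizon: evaluate once, at the planned end of the test.
            significant = p_value(conv_a, n, conv_b, n) < alpha
        false_positives += int(significant)
    return false_positives / sims


print(f"Check once at the end: {aa_false_positive_rate(peek_daily=False):.1%}")
print(f"Peek daily, stop early: {aa_false_positive_rate(peek_daily=True):.1%}")
```

Checking once should land near the nominal 5% false-positive rate, while daily peeking declares a false winner far more often, even though nothing about the two experiences differs.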

Conclusion

A/B testing becomes a true growth engine when it’s guided by thoughtful hypotheses, prioritized with intention, executed under stable conditions, and interpreted with discipline. It sharpens your understanding of customer behavior and exposes the improvements that genuinely influence revenue. For ecommerce teams committed to long-term growth, experimentation isn’t optional; it’s the foundation of informed decision-making.

For more strategic insights on CRO and ecommerce performance, explore additional content from Vasta.